Data Availability

The Overlooked Pillar of Scalability (Part 1)

tl;dr

Data availability refers to on-chain transaction data being accessible to all nodes for validation and consensus. It's the overlooked pillar enabling blockchain scalability and decentralization.

In Part 1 of this three-part series, we explore why data availability matters by diving deep into:

Transaction lifecycles in PoW and PoS - Shows where availability bottlenecks emerge during validation and consensus stages.

Comparing Ethereum and rollups - Ethereum provides inherent on-chain availability. Rollups face the "data availability problem": sequencers have no built-in incentive to publish all of their data.

Rollup cryptography - Fraud and validity proofs fundamentally rely on accessible data to function securely.

Users require availability to verify balances, submit transactions, and audit independently. For rollups, availability enables open participation, state derivation, and security. It makes scalability about data schemes, not just throughput.

Part 1 establishes why data availability serves as the overlooked pillar upholding blockchain scalability, security, and meaningful decentralization.

Parts 2 and 3 will dive into the data availability problem and the layers being built to solve it.

Data availability. It's a term we throw around a lot in conversations, but what does it actually mean? And why does it matter so much for rollups and danksharding that promise to scale decentralized systems?

In Part 1 of this series, we'll break data availability down to first principles and build up an understanding of why it's so pivotal for blockchains and Layer 2 innovations.

We'll start by looking at what data availability refers to at a high level. Then we'll examine how it fits into transaction lifecycles and validation in both proof-of-work and proof-of-stake networks. This will provide context on where availability bottlenecks can emerge.

Next, we'll contrast availability designs in Ethereum versus rollups. This highlights the "data availability problem" that arises in layer 2 systems and sets the stage for Part 2, where we'll dive deeper into that issue.

Finally, Part 3 will cover the data availability layers in detail.

Our aim is to take a topic that seems esoteric and make its importance clear and intuitive. This topic matters because data availability is the foundation for scalability, security, and meaningful decentralization.

Introduction

Data availability refers to transaction data and blockchain state being available to all nodes in a decentralized network. This is essential for nodes to be able to validate transactions, reconstruct an accurate state, and produce new blocks. Without data availability, blockchains cannot function securely or permissionlessly.

In simpler terms, data availability is the guarantee that on-chain data is reliably accessible to all participants in a blockchain network. It is a critical component of blockchain scalability and decentralization.

In a blockchain like Ethereum, transactions are processed by a decentralized network of validators who propose blocks. For each block, the validator must propagate two things - the block header and the full block data. The header contains metadata and is small, while the full data for all the transactions can be large. Broadcasting the full block data to the entire network presents a bandwidth bottleneck that limits scalability. Data availability schemes aim to alleviate this by spreading out the responsibility of storing and transmitting the full data.
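To make the header/data split concrete, here is a minimal Python sketch. It assumes the header commits to the block data through a Merkle root; Ethereum's actual block structures differ, and `make_header` and its fields are hypothetical. The point is that the header carries a 32-byte commitment however large the data grows, and any change to the data changes that commitment.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(chunks: list[bytes]) -> bytes:
    """Hash each chunk, then hash adjacent pairs level by level until one root remains."""
    if not chunks:
        return sha256(b"")
    level = [sha256(c) for c in chunks]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd-sized levels
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def make_header(parent_hash: bytes, chunks: list[bytes]) -> dict:
    """Hypothetical header: small metadata plus a commitment to the full block data."""
    return {"parent": parent_hash, "data_root": merkle_root(chunks)}


# The full data is large; the header only carries its 32-byte root.
block_data = [bytes([i]) * 1024 for i in range(64)]  # 64 chunks of 1 KiB each
header = make_header(b"\x00" * 32, block_data)
print(len(header["data_root"]), sum(len(c) for c in block_data))  # 32 65536
```

A node holding only the header cannot check a transaction by itself, but it can check any chunk it is later given against `data_root`.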

The block data is split into erasure-coded fragments, which are distributed to different validator nodes. Validators can then check, with high statistical confidence, that the full data is available by randomly sampling fragments from each other, without any of them needing to hold the full data themselves.
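The erasure-coding idea can be sketched in a few lines of Python. This toy version, in the spirit of Reed-Solomon codes, treats k data values as points on a polynomial over a prime field and publishes 2k evaluations; any k of them are enough to rebuild the original. The field size and the `extend`/`reconstruct` names are illustrative assumptions, not any client's API, and production designs use much larger fields plus polynomial commitments.

```python
P = 2**31 - 1  # toy prime modulus; all data values must be smaller than P


def lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate, at x, the unique polynomial of degree < len(points) through `points` (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, P - 2, P) is the modular inverse of den (Fermat's little theorem)
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def extend(data: list[int]) -> list[int]:
    """Data sits at x = 0..k-1; append k parity values at x = k..2k-1 (a 2x extension)."""
    k = len(data)
    points = list(enumerate(data))
    return data + [lagrange_eval(points, x) for x in range(k, 2 * k)]


def reconstruct(fragments: list[tuple[int, int]], k: int) -> list[int]:
    """Rebuild the original k values from any k distinct (index, value) fragments."""
    if len(fragments) < k:
        raise ValueError("need at least k fragments")
    return [lagrange_eval(fragments[:k], x) for x in range(k)]


data = [11, 22, 33, 44]
fragments = extend(data)  # 8 fragments, spread across different nodes
survivors = [(i, fragments[i]) for i in (1, 4, 6, 7)]  # half of them were lost
print(reconstruct(survivors, k=4))  # [11, 22, 33, 44]
```

Because any half of the fragments suffices, an attacker has to hide more than half of them to make the block unrecoverable, which is what makes random sampling effective.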

In designs that add custody challenges, a validator that cannot produce the fragments it is challenged on is provably withholding data and can be slashed or otherwise penalized. This economically compels validators to retain their data fragments. Meanwhile, ordinary network nodes only need to receive the small block headers to stay synchronized; the verification scheme gives them confidence that the full block data is being retained. Users who do want the full data can retrieve fragments from the validators as needed.
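Why does sampling give confidence? A back-of-the-envelope Python sketch, assuming a one-dimensional code that doubles the data (so an attacker must withhold more than half of the fragments to prevent reconstruction) and independent, uniformly random samples. Each sample then hits a missing fragment with probability at least 1/2, so the chance of being fooled shrinks exponentially with the number of samples. Two-dimensional schemes change the per-sample probability but not the exponential shape. The function names are hypothetical.

```python
import math


def detection_probability(samples: int, withheld_fraction: float = 0.5) -> float:
    """Chance that at least one independent uniform sample lands on a withheld fragment."""
    return 1 - (1 - withheld_fraction) ** samples


def samples_needed(max_fooled: float, withheld_fraction: float = 0.5) -> int:
    """Smallest s with (1 - withheld_fraction) ** s <= max_fooled."""
    return math.ceil(math.log(max_fooled) / math.log(1 - withheld_fraction))


print(detection_probability(10))  # 0.9990234375
print(samples_needed(1e-9))       # 30
```

Roughly thirty small random queries are enough for a node to be fooled less than once in a billion blocks, without downloading the block.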

Lack of data availability leads to problems such as channels without globally ordered updates, Plasma chains becoming permissioned if data is withheld, and, in systems using zero-knowledge proofs, the inability to determine account balances and reconstruct state without the full transaction history. Data availability is thus widely considered a core challenge for blockchains to solve.

Imagine you have a big puzzle with lots of pieces. Now, if some pieces are hidden, and you can't find them, you can't complete the puzzle, right? Data Availability Layers are like making sure that all the puzzle pieces are on the table for everyone to see and use. This way, everyone can help put the puzzle together, and we can be sure that the picture we make is the right one.

Before we dive deeper into data availability, it’s important to understand the full transaction lifecycle in proof-of-work (PoW) and proof-of-stake (PoS) blockchains.

Proof-of-work (PoW)

Transaction Creation: The user creates a new transaction containing the sender, receiver, amount, and digital signature. This generates a valid transaction message.

Transaction Broadcast: The user's node broadcasts the transaction to all peer nodes in the blockchain network so it can be verified and added to the chain.

Transaction Verification: The nodes that receive the broadcast transaction verify it by checking the signature, account balances, etc.

Entering Transaction Pool: If verified, the transaction enters the transaction pool where it waits to be included in a block.

Transaction Rejected: If transaction verification fails, nodes reject it and do not add it to the pool.

Mining: Miners assemble valid transactions from the pool and perform proof-of-work to solve the cryptographic puzzle.

Block Broadcast: The first miner to solve the puzzle broadcasts the new block containing the transactions to all nodes.

Block Verification: Nodes verify the new block meets consensus rules before accepting it as valid.

Blockchain Update: If the block is valid, nodes add it to their blockchain copy, confirming the transactions.

Transaction Confirmed: The user's node receives confirmation once the block containing the transaction is added to the chain.

Retry Mining: If block verification fails, nodes reject the invalid block, and miners continue proof-of-work mining to produce a new valid block.
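The PoW lifecycle above can be condensed into a toy Python sketch. It is an assumption-heavy simplification: signatures are skipped, an account-balance map replaces a UTXO set, and `DIFFICULTY` (leading hex zeros in a SHA-256 hash) is a hypothetical stand-in for a real difficulty target. The detail to notice is that `verify_block` needs the full transaction list, not just a hash, to do its job.

```python
import hashlib
import json

DIFFICULTY = 3  # hypothetical: required number of leading hex zeros


def block_hash(block: dict) -> str:
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def verify_tx(tx: dict, balances: dict) -> bool:
    """Toy check (signature verification omitted): positive amount and sufficient balance."""
    return tx["amount"] > 0 and balances.get(tx["from"], 0) >= tx["amount"]


def apply_tx(tx: dict, balances: dict) -> None:
    balances[tx["from"]] -= tx["amount"]
    balances[tx["to"]] = balances.get(tx["to"], 0) + tx["amount"]


def mine(prev_hash: str, txs: list) -> dict:
    """Try nonces until the block hash meets the difficulty target."""
    nonce = 0
    while True:
        block = {"prev": prev_hash, "txs": txs, "nonce": nonce}
        if block_hash(block).startswith("0" * DIFFICULTY):
            return block
        nonce += 1


def verify_block(block: dict, prev_hash: str, balances: dict) -> bool:
    """Check the link, the proof-of-work, and every transaction; this needs the full block data."""
    if block["prev"] != prev_hash or not block_hash(block).startswith("0" * DIFFICULTY):
        return False
    scratch = dict(balances)
    for tx in block["txs"]:
        if not verify_tx(tx, scratch):
            return False
        apply_tx(tx, scratch)
    return True


balances = {"alice": 10}
mempool = []
for tx in ({"from": "alice", "to": "bob", "amount": 4},
           {"from": "bob", "to": "carol", "amount": 99}):
    if verify_tx(tx, balances):
        mempool.append(tx)  # enters the transaction pool
    # otherwise the transaction is rejected

genesis_hash = "0" * 64
block = mine(genesis_hash, mempool)
if verify_block(block, genesis_hash, balances):
    for tx in block["txs"]:
        apply_tx(tx, balances)  # blockchain update: the transaction is confirmed
print(balances)  # {'alice': 6, 'bob': 4}
```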

The data availability of a PoW blockchain is mainly reflected in two stages:

Transaction Verification Stage:

This is where nodes need to obtain relevant data to verify the validity of the transaction.

Data needs to include details like the sender's account balance, signature, past transaction inputs, etc.

Nodes may not have all this data locally if they only maintain partial state.

They depend on retrieving the required verification data from other nodes within a specific time limit.

If retrieval takes too long, the node cannot validate the transaction properly; from its point of view, the data is effectively unavailable.

Factors like network propagation delays, node performance, and data redundancy affect availability.

Block Verification Stage:

After a new block is broadcast, nodes need to retrieve data to verify the block.

This includes the block header (with its proof-of-work solution) and the transaction details. Proof-of-stake chains additionally require validator signatures.

Nodes need the verification data within a time limit to reach consensus and accept the block.

If block data retrieval is delayed, consensus slows down and effective availability drops.

Retrieval time is impacted by network delays and data redundancy.

These two validation stages in the lifecycle rely heavily on nodes being able to obtain the required data within specific time bounds, making data availability a key requirement.
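The "within a time limit" requirement can be illustrated with a small asyncio sketch. It assumes a node asks several replicas for the same verification data in parallel and uses the first answer that arrives before its deadline; the peer delays, the payload, and all function names are hypothetical. More replicas raise the odds that some answer arrives in time, and if none does, the node cannot validate, even though the data may exist somewhere.

```python
import asyncio


async def fetch_from_peer(delay_s: float, payload: dict) -> dict:
    """Hypothetical peer that answers after a simulated network delay."""
    await asyncio.sleep(delay_s)
    return payload


async def fetch_first(peer_delays: list[float], deadline_s: float, payload: dict):
    """Query every replica at once; return the first answer received before the deadline, else None."""
    tasks = [asyncio.create_task(fetch_from_peer(d, payload)) for d in peer_delays]
    done, pending = await asyncio.wait(tasks, timeout=deadline_s,
                                       return_when=asyncio.FIRST_COMPLETED)
    for task in pending:
        task.cancel()  # slower replicas are no longer needed
    if not done:
        return None  # nothing arrived in time: for this node, the data is unavailable
    return next(iter(done)).result()


def validate_tx(peer_delays: list[float], deadline_s: float, amount: int) -> str:
    data = asyncio.run(fetch_first(peer_delays, deadline_s, {"sender_balance": 10}))
    if data is None:
        return "cannot validate (data unavailable)"
    return "valid" if data["sender_balance"] >= amount else "invalid"


print(validate_tx([0.5, 0.01], deadline_s=0.2, amount=4))  # valid
print(validate_tx([0.5], deadline_s=0.05, amount=4))       # cannot validate (data unavailable)
```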

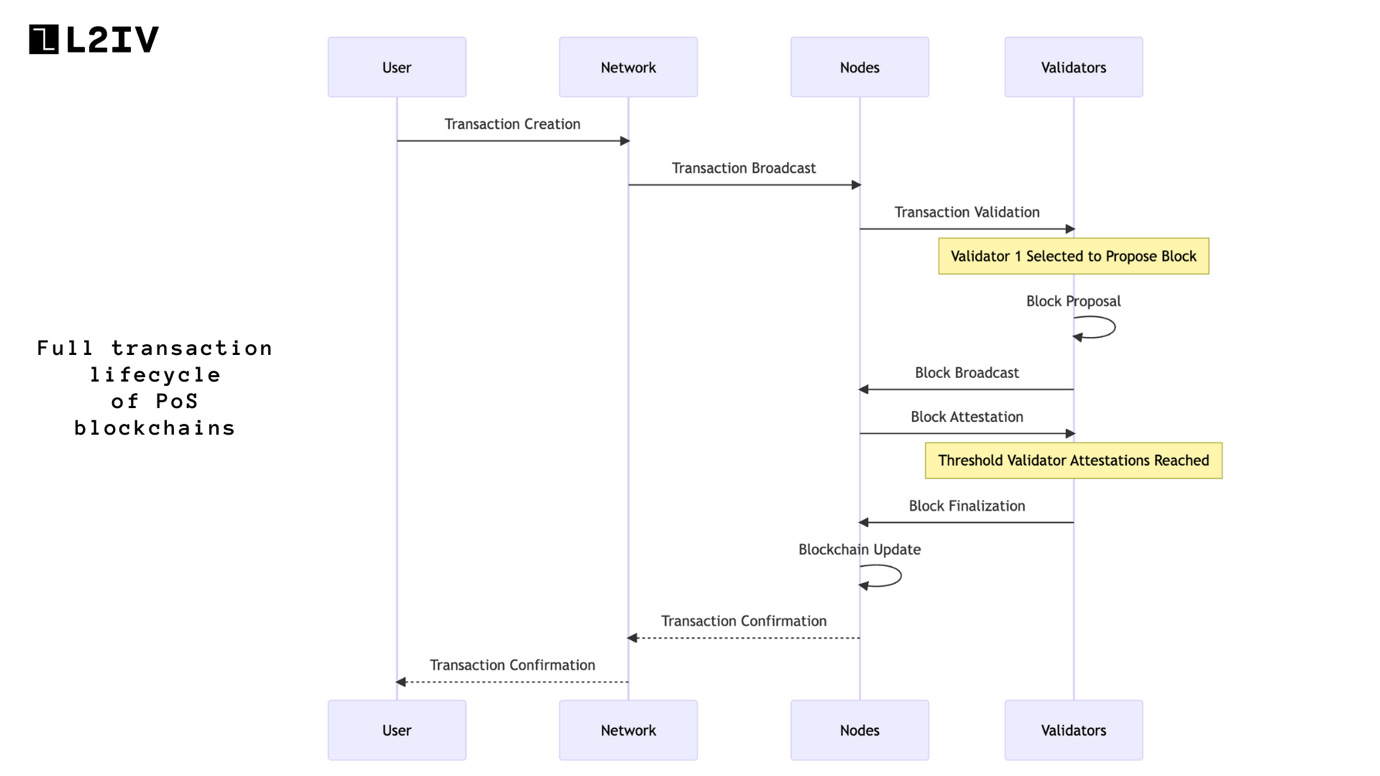

Proof-of-Stake (PoS)

Transaction Creation: The user creates a new transaction with the necessary data and digital signature.

Transaction Broadcast: The user broadcasts the transaction to the peer-to-peer network.

Transaction Validation: Validator nodes receive and verify the transaction against consensus rules.

Validator Selection: One validator node is selected to propose a new block based on the PoS algorithm.

Block Proposal: The selected validator assembles valid transactions into a new block and proposes it.

Block Broadcast: The validator broadcasts the proposed block to all nodes.

Block Attestation: Other validators check the block validity and broadcast attestations if it's valid.

Block Finalization: Once enough validator attestations are received, the block gets finalized.

Blockchain Update: Nodes add the finalized block and transactions to the blockchain.

Transaction Confirmation: Confirmation of the transaction is sent back to the user's client via the network.
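The selection and finalization steps can be sketched as follows. This is a deliberate simplification: Ethereum uses RANDAO-based proposer selection and Casper FFG checkpoints across epochs, whereas here a seeded stake-weighted draw picks the proposer and a block counts as finalized once validators holding at least two-thirds of the total stake attest to it. The `has_block_data` map is a hypothetical stand-in for whether each validator managed to download the block; a validator that could not retrieve the data cannot attest.

```python
import random


def select_proposer(stakes: dict[str, int], seed: int) -> str:
    """Stake-weighted, seeded draw (a stand-in for the chain's real randomness source)."""
    validators = sorted(stakes)
    rng = random.Random(seed)
    return rng.choices(validators, weights=[stakes[v] for v in validators], k=1)[0]


def is_finalized(stakes: dict[str, int], attesters: set[str]) -> bool:
    """True once attesting stake reaches a two-thirds supermajority of total stake."""
    total = sum(stakes.values())
    attested = sum(stakes[v] for v in attesters)
    return 3 * attested >= 2 * total  # integer arithmetic avoids float rounding


stakes = {"v1": 32, "v2": 32, "v3": 32}
proposer = select_proposer(stakes, seed=42)

# Only validators that retrieved the full block data can check it and attest.
has_block_data = {"v1": True, "v2": True, "v3": False}
attesters = {v for v, ok in has_block_data.items() if ok}
print(proposer in stakes, is_finalized(stakes, attesters))  # True True
```

If one more validator had failed to fetch the block, attesting stake would drop below two-thirds and the block would not finalize.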

The data availability of a PoS chain is mainly reflected in two stages:

Transaction Validation Stage:

Validators need to retrieve all relevant data to fully validate transactions. This includes account balances, signatures, contract state, etc.

Data needs to be available to validators within a time limit to complete validation before proposing blocks.

If retrieval from other nodes takes too long, transactions cannot be properly validated, and the data is effectively unavailable.

Factors like network latency, data replication, and validator performance affect the ability to obtain data on time.

Block Attestation Stage:

After block proposal, validators need to verify the block data to attest to its validity.

This requires retrieving the block contents, validator signatures, and the previous block's information to confirm chain continuity.

Delayed data retrieval delays attestations, slowing down consensus and finality.

Validators require quick access to full block data to participate in consensus and avoid forks.

Data replication across validators is necessary for timely availability.

The two validation points in PoS - transaction validation and block attestation - rely heavily on quick data access. The consensus finality and security of PoS chains depend on excellent data availability amongst validators.
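A rough way to see why replication matters: if each of n validators holding a copy of the data is reachable with probability p, independently of the others (a strong simplifying assumption), the chance that at least one copy can be fetched is 1 - (1 - p)^n. The Python sketch below computes that figure and the smallest n that reaches a target; the function names and numbers are illustrative only.

```python
import math


def availability(replicas: int, uptime: float) -> float:
    """P(at least one replica responds), assuming independent replicas each up with prob `uptime`."""
    return 1 - (1 - uptime) ** replicas


def replicas_needed(target: float, uptime: float) -> int:
    """Smallest n with availability(n, uptime) >= target."""
    return math.ceil(math.log(1 - target) / math.log(1 - uptime))


print(round(availability(3, 0.9), 6))  # 0.999
print(replicas_needed(0.9999, 0.8))    # 6
```

Real failures are correlated (shared clouds, regions, client bugs), so actual availability is lower than this independent-failure estimate.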

In both PoW and PoS chains, new nodes joining the network need to synchronize by obtaining the latest blockchain state - which includes transactions, account balances, contract data, etc.

This synchronization process relies heavily on data availability. The new node depends on timely retrieval of up-to-date data from existing nodes on aspects like:

Obtaining full block headers and block contents to reconstruct the current blockchain state. Any missing blocks will result in failed synchronization.

Retrieving all recent validated transactions to update account balances and contract storage to the latest state.

Getting updated contract code and contract storage values to execute transactions.

Receiving up-to-date validator information for consensus participation in PoS chains.

Any significant delays in retrieving the necessary data will stall the synchronization process. The node cannot fully join the network and participate in validation without synchronizing its state.
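The dependence of synchronization on every historical block can be sketched in Python. The assumptions are deliberate simplifications: blocks are plain lists of already-validated `(sender, receiver, amount)` transfers, state is a balance map, and `fetch_block` is a hypothetical callback standing in for requests to peers, returning `None` when no peer serves the block. A single missing block halts the replay.

```python
from typing import Callable, Optional

Block = list[tuple[str, str, int]]


class SyncError(Exception):
    pass


def sync(genesis: dict[str, int], fetch_block: Callable[[int], Optional[Block]],
         head: int) -> dict[str, int]:
    """Replay blocks 1..head on top of the genesis state to reconstruct current balances."""
    balances = dict(genesis)
    for height in range(1, head + 1):
        block = fetch_block(height)
        if block is None:
            raise SyncError(f"block {height} unavailable; cannot reconstruct state")
        for sender, receiver, amount in block:
            balances[sender] -= amount
            balances[receiver] = balances.get(receiver, 0) + amount
    return balances


chain = {1: [("alice", "bob", 3)], 2: [("bob", "carol", 1)]}
print(sync({"alice": 10}, chain.get, head=2))  # {'alice': 7, 'bob': 2, 'carol': 1}
```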

Efficient data synchronization enables the smooth onboarding of new nodes and continuous availability of the latest validated blockchain state data - which is essential for transaction validation, block formation, and consensus in both PoW and PoS architectures. The synchronization process represents a key phase where excellent data availability is necessary for blockchain networks to maintain high participation and transaction throughput.

For the rest of this series, we will focus only on the PoS ecosystem, especially the Ethereum Layer 1 chain.

Now that we have a better understanding of how data availability fits into the transaction lifecycle, one question remains:

Is DA in Ethereum different from DA in L2s?

There are some key differences between how data availability works on Ethereum versus in layer 2 solutions.

On Ethereum, full transaction data and block history are stored directly on the blockchain. Any full node can reconstruct a valid state by processing all transactions from the genesis block. However, this requires significant storage and leads to scalability issues.

In Layer 2 solutions like rollups, transaction execution is offloaded to a sequencer. The sequencer batches transactions and posts the batch data to Ethereum in compressed form, together with a commitment to the resulting state (and, for ZK rollups, a validity proof). Ethereum does not re-execute these transactions. This saves resources on layer 1 but requires alternative methods to ensure the data stays available.

For optimistic rollups, all transaction data must be posted on-chain so any party can reconstruct the state and submit a fraud proof if a posted state is invalid. But full history storage isn't necessary.

ZK rollups use validity proofs, so correctness does not depend on anyone re-executing the transaction data. However, the data must still be made available so users can determine their account state.

Wherever it is kept, this data needs to be available for users to interact with the rollup and, in optimistic rollups, for fraud proof generation. Any part of it kept off-chain carries the risk of the sequencer censoring or limiting access to it.

Hence,

In Ethereum, data availability is on-chain and enforced inherently.

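But in rollups, data availability depends on the sequencer actually publishing the data, so additional guarantees, such as posting the data to layer 1 or cryptographic data availability proofs, are needed to prevent censorship by sequencers.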

This difference in how data is validated, stored, and posted on-chain gives rise to the problem that emerged alongside rollups: the Data Availability Problem.

The crux of the data availability problem in layer 2 solutions is incentives. Unlike miners and validators on layer 1, whose blocks are rejected if their data is missing, the sequencer is not inherently incentivized to make all the data available. So, additional mechanisms are needed to ensure availability.

We will discuss the Data Availability Problem in detail in part 2 of this series.

Before that, we need a basic understanding of why, even with multiple rollup designs, we still need proper data availability solutions:

Optimistic rollups require transaction data to be posted on-chain so any party can reconstruct the rollup's state and submit fraud proofs if invalid transactions are detected. Full data availability is essential to the optimistic model. Without it, invalid state transitions could go unnoticed, since nodes could not monitor the rollup for fraud. The raw transaction data must be accessible on-chain for optimistic security to work.

ZK rollups, on the other hand, use validity proofs to guarantee correctness, so the validity of each state transition does not depend on anyone re-checking the transaction data. However, the data must still be made available. This availability allows users to determine the true state of their accounts in the rollup from the raw transaction inputs and outputs. Though validity is proven, data availability remains necessary for users to derive the actual state. If transaction data were unavailable in a ZK rollup, funds could be provably sent to a user's account, but that user would be unable to determine their updated balance. The data must exist for state derivation.

The foundational dependence on data availability highlights how pivotal it is to decentralized infrastructure. Data availability provides the bedrock for cryptographic proofs and fraud detection schemes to function securely. Both rollup types optimize data availability for scalability rather than full replication. However, they underscore that availability remains imperative even with advanced cryptography and proofs.

But how do we understand the rollup’s data availability requirements?

The answer lies in understanding the different cryptographic proofs used by both types of rollups.

Optimistic rollups rely on fraud proofs: state updates are assumed valid unless challenged, so nodes must actively monitor the transaction data for invalid state transitions and submit fraud proofs when they detect one. This mechanism requires full data availability so that nodes can observe the raw transaction data and construct fraud proofs as needed. The proofs depend on accessible data to function properly.
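The core check behind a fraud proof can be sketched as "re-execute the batch from the published data and compare roots". This Python sketch rests on stated simplifications: the state root is a flat SHA-256 hash of a balance map rather than a Merkle tree, and real systems typically narrow disputes with an interactive bisection game instead of re-executing everything on-chain. `apply_batch` and `check_claim` are hypothetical names.

```python
import hashlib
import json
from typing import Optional


def state_root(balances: dict[str, int]) -> str:
    """Toy commitment: SHA-256 of the canonical JSON encoding (real rollups use Merkle-style trees)."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


def apply_batch(balances: dict[str, int], batch: list[tuple[str, str, int]]) -> dict[str, int]:
    """Re-execute a batch of transfers; under this toy rule, overdrafts are skipped."""
    state = dict(balances)
    for sender, receiver, amount in batch:
        if state.get(sender, 0) < amount:
            continue
        state[sender] -= amount
        state[receiver] = state.get(receiver, 0) + amount
    return state


def check_claim(pre_state: dict[str, int], batch: Optional[list[tuple[str, str, int]]],
                claimed_root: str) -> str:
    """What a watcher does with a sequencer's claimed post-state root."""
    if batch is None:
        return "cannot check: batch data unavailable"
    if state_root(apply_batch(pre_state, batch)) == claimed_root:
        return "ok"
    return "fraud detected: submit a fraud proof"


pre_state = {"alice": 10}
batch = [("alice", "bob", 4)]
honest = state_root({"alice": 6, "bob": 4})
forged = state_root({"alice": 10, "mallory": 4})
print(check_claim(pre_state, batch, honest))  # ok
print(check_claim(pre_state, batch, forged))  # fraud detected: submit a fraud proof
print(check_claim(pre_state, None, forged))   # cannot check: batch data unavailable
```

The last line is the failure mode the data availability problem describes: without the batch data, a forged root cannot be challenged.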

ZK rollups use validity proofs, generated for every batch, to cryptographically prove correctness. However, the validity proofs themselves do not convey the actual ledger state. They prove that the state transition is correct but don't reveal the resulting account balances.

The raw transaction inputs and outputs must still be made available for users to determine the true state of their accounts and holdings from the source data.
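Why a validity proof alone cannot tell users their balance can be shown with a small sketch. Assume, hypothetically, that the proof has already been verified, so the new state root is known to be correct, and that the rollup publishes state diffs; the hash-based `state_root` is a simplified stand-in for a real state commitment, and `derive_balance` is a hypothetical name. With the diffs, a user can rebuild the state and check it against the proven root; without them, only the root is known.

```python
import hashlib
import json
from typing import Optional


def state_root(balances: dict[str, int]) -> str:
    """Toy commitment: SHA-256 of the canonical JSON encoding of the balance map."""
    return hashlib.sha256(json.dumps(balances, sort_keys=True).encode()).hexdigest()


def derive_balance(prev_state: dict[str, int], diffs: Optional[dict[str, int]],
                   proven_root: str, account: str) -> Optional[int]:
    """Rebuild the post-state from published diffs; return None if the data was withheld."""
    if diffs is None:
        return None  # the root is proven correct, but the balances behind it are unknown
    state = {**prev_state, **diffs}
    if state_root(state) != proven_root:
        raise ValueError("published data does not match the proven state root")
    return state.get(account, 0)


prev_state = {"alice": 10}
proven_root = state_root({"alice": 6, "bob": 4})  # assumed to come with a verified validity proof
print(derive_balance(prev_state, {"alice": 6, "bob": 4}, proven_root, "bob"))  # 4
print(derive_balance(prev_state, None, proven_root, "bob"))                    # None
```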

Understanding the different proofs highlights why both rollup types fundamentally depend on data availability, despite their contrasting validation approaches.

The proofs themselves do not provide the actual ledger state. In both cases, they rely on an underlying foundation of data availability to be meaningful and useful in deriving reality.

Fraud proofs use data availability to detect and prove misconduct.

Validity proofs depend on data availability to derive state.

The proofs provide transaction validity but require accessible data to anchor that validity to economic reality. Only with data availability can users hold systems accountable and independently audit the state.

Explicitly noting the validation methods provides clearer insight into why data availability remains an absolute necessity in both optimistic and ZK rollups. The proofs operate on and infer meaning from that base data layer.

Now, we can provide another definition for data availability: the bridge between the symbolic logic of validity proofs and fraud proofs, and the actual account balances and positions they aim to secure. Data hooks the math into economic reality.

This helps explain why so much research focuses on innovating data availability schemes using methods like erasure coding, data availability sampling, sharding, and off-chain storage with on-chain verification. Scaling data availability unlocks the scalability of advanced layer 2 constructions that leverage fraud or validity proofs.

Ultimately, rollups reveal that decentralized scalability hinges on data availability scaling more than raw throughput gains. Sufficient data availability unlocks the benefits of layer 2 innovations.

So, what does Data Availability mean to you as a user?

As a user, data availability ensures you can always verify the current state of your account and the overall ledger. Without guaranteed data availability, you could have on-chain assets (crypto, NFTs, SBTs, etc.) provably sent to your account but be unable to determine your updated balance.

When you send a transaction, data availability means the network has the information needed to validate your transaction and include it in a block. Your transaction data has to be distributed to all nodes so they can reach a consensus on the order of transactions.

When someone sends funds to your account, data availability means you can obtain the details of that transaction to confidently determine your new account balance. The raw data of blocks and transactions needs to be accessible on request.

If there is poor DA on the chain you interact with, you can't rely on explorers or wallets to provide accurate information about your account state. They are dependent on accessing transaction data to derive up-to-date balances.

DA gives you the confidence that your on-chain assets and transactions will remain verifiable on the decentralized ledger even if some nodes go offline. The data persists and remains accessible in a highly distributed manner.

Without any DA guarantees, it only takes a few unreliable nodes to prevent you from verifying your account state or retrieving your transaction history. DA helps keep your funds under your control and is essential for your ability to audit the ledger yourself.

What does data availability mean for a rollup?

We have discussed this in detail before, but for a rollup, DA is critical for validating state transitions and enabling nodes to produce new blocks. Without access to complete transaction data, the rollup cannot function securely.

DA in rollups is essential for open participation. For ZK rollups specifically, even though validity proofs guarantee correctness, data availability is still necessary for users to determine their account state. The raw transaction inputs and outputs must be accessible. DA is what makes rollups more than just an optimization. Combined with fraud proofs or validity proofs, it provides the basis for rollups to offer security and decentralization advantages over traditional layer 2 designs. Rollups show that scalability in a decentralized context is less about raw throughput and more about data availability scaling.

Conclusion

And that wraps up this primer on data availability. We covered a ton of ground.

Data availability is the critical foundation underlying scalability and decentralization in blockchains. As we explored, it enables transaction validation, consensus, state derivation, and synchronization across networks.

For users, data availability provides confidence that account balances and ownership are verifiable on-chain. It ensures transactions persist securely in a decentralized manner.

Rollups demonstrate that raw throughput is secondary - innovative data availability schemes unlock the capabilities of advanced cryptographic proofs. Fraud and validity proofs rely on accessible data to anchor their security guarantees.

Progress in data availability research is thus pivotal to the growth of Web3 infrastructure. Methods like erasure coding, sharding, and off-chain availability with on-chain verification hold immense promise.

In Parts 2 and 3, we will dive deeper into the data availability problem and the layers being built to solve it.

Find L2IV at l2iterative.com and on Twitter @l2iterative

Author: Arhat Bhagwatkar, Research Analyst, L2IV (@0xArhat)


Disclaimer: This content is provided for informational purposes only and should not be relied upon as legal, business, investment, or tax advice. You should consult your own advisors as to those matters. References to any securities or digital assets are for illustrative purposes only, and do not constitute an investment recommendation or offer to provide investment advisory services.